Verification is no longer a compliance step or an onboarding formality; it is operational infrastructure. Every lending decision, whether instant, assisted, or post-approval, depends on the reliability, sequencing, and interpretability of verification outcomes.

Yet most lending enterprises still operate verification as a fragmented collection of checks. Identity is verified one way during onboarding, income another way during underwriting, and employment in yet another manner during disbursal, and all of it is reconstructed again during audits, disputes, or investigations.

High-volume lending systems do not fail verification because they lack data sources, vendors, or checks. They fail because verification decisions cannot be re-executed, re-explained, or re-defended once volume, time, and exceptions enter the system.

At scale, this fragmented approach breaks.

As application volumes grow, product portfolios expand, and regulatory scrutiny intensifies, lenders face a structural challenge: how to design verification workflows that remain resilient under load, adaptable across journeys, and defensible months or years after the decision was made.

At this point, verification is no longer a tooling problem. It is an architectural one.

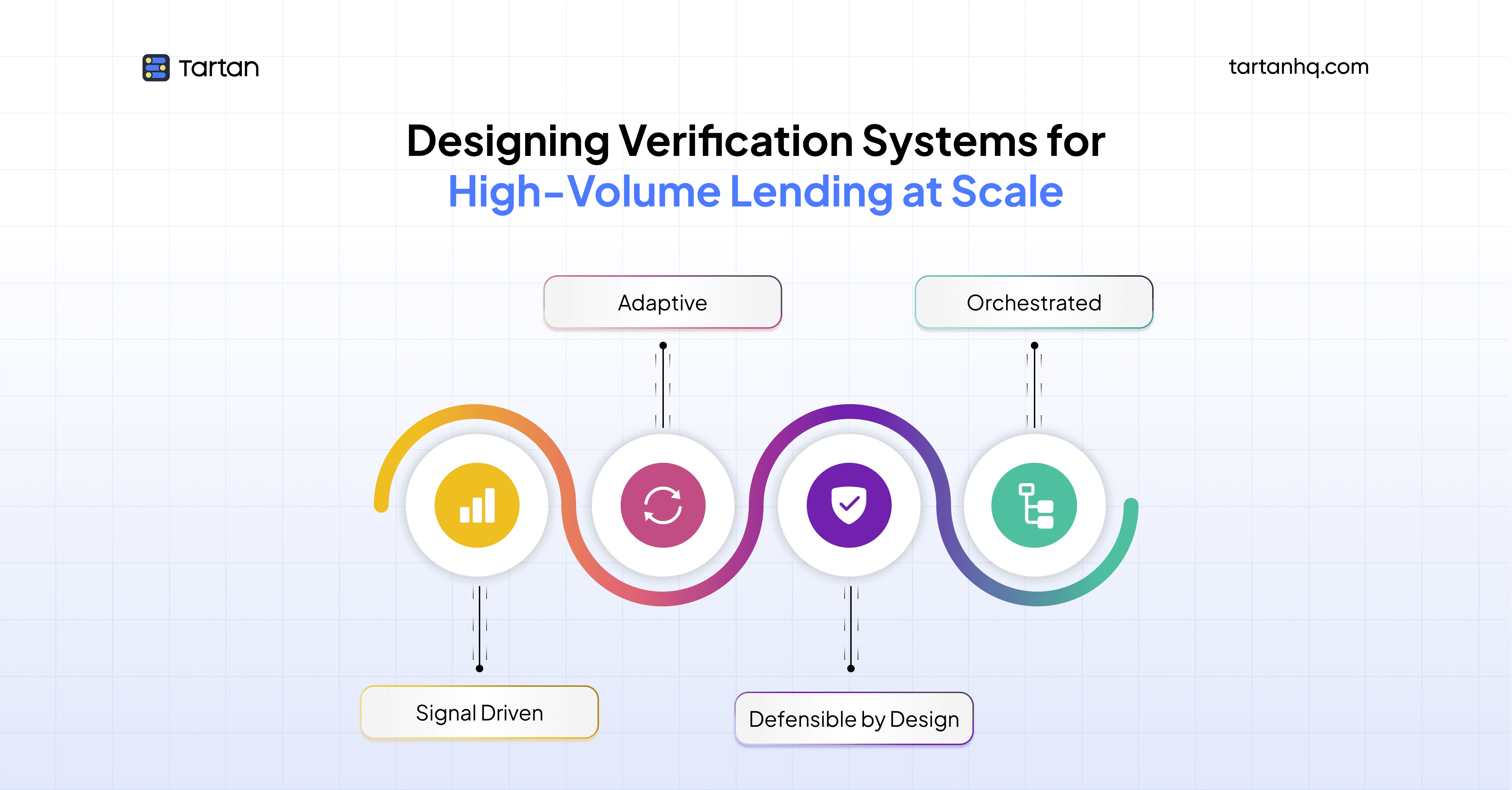

High-volume lending needs verification systems that coordinate signals, adapt when data is incomplete, handle exceptions in a controlled way, and retain clear evidence long after a decision is made. This requires deliberate design across orchestration, fallbacks, exception handling, and audit-grade evidence treated as core infrastructure, not operational afterthoughts.

How Verification Logic Constrains Lending Throughput

Verification rarely fails loudly in lending systems. It degrades quietly.

Approval rates start fluctuating without clear explanation. Disbursal timelines stretch even though no single step appears slow in isolation. Exception queues grow, but only for certain products, partners, or geographies. Risk teams notice inconsistencies across cohorts, but cannot trace them back to a single control failure.

These symptoms typically point to the same underlying issue: verification logic has become embedded inside journeys rather than governed as a shared system.

In many lending stacks, verification decisions are hard-coded into onboarding flows, underwriting scripts, or partner-specific integrations. Each journey optimizes for its own success metrics (conversion, speed, or fraud reduction) using locally scoped logic. Over time, this creates multiple definitions of verification sufficiency inside the same enterprise.

As a result:

Verification breaks between onboarding and underwriting

Income signals are not interpreted uniformly

Exception resolution lacks a single standard

Central policies do not translate into consistent execution

At scale, this misalignment is not just inefficient; it becomes an operational risk. When decision logic is scattered across journeys, changes to policy require coordinated code updates, vendor reconfigurations, and retraining across teams.

Verification must therefore move out of individual flows and into an orchestrated layer where sequencing, thresholds, and escalation rules are applied consistently, regardless of how or where the customer enters the system.

Reframing Verification: From Checks to Orchestration

Verification orchestration refers to the ability to design, execute, and govern verification workflows as coordinated systems rather than isolated API calls.

Across large-scale lending platforms, verification is typically implemented as a set of point interactions: call an identity API, fetch an income document, run a bureau check, route failures to a manual queue.

An orchestrated model treats verification as a decision system.

It introduces control over when checks are triggered, why they are required, and how their outcomes affect the overall confidence in a customer profile. Rather than executing all checks by default, orchestration enables verification to progress based on the quality, consistency, and sufficiency of signals already available.

In an orchestrated verification framework:

Verification steps are sequenced intentionally

Decisions adapt dynamically based on signal quality

Fallbacks are triggered automatically when primary checks fail

Exceptions are handled systematically, not manually

Evidence is captured continuously and preserved contextually

This shift is structural, not superficial. Without orchestration, verification scales by adding vendors, rules, and manual effort, driving up cost, complexity, and inconsistency. With orchestration, verification scales through control: predictable workflows, measurable outcomes, and aligned execution across products and channels.

For high-volume lending enterprises, orchestration is what separates verification that merely completes checks from verification that reliably supports growth.
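The automatic fallback behavior described above can be sketched in a few lines. This is only an illustration under stated assumptions: the provider functions, the `SourceUnavailable` exception, and the `exception_queue` route are hypothetical names, not part of any specific product or vendor API. The point is that the fallback order is data, and every attempt is recorded rather than lost.

```python
class SourceUnavailable(Exception):
    """Raised by a (hypothetical) provider that cannot return a result."""


def primary_income_check(applicant):
    # Simulates a vendor outage on the primary source.
    raise SourceUnavailable("payroll provider timed out")


def fallback_income_check(applicant):
    # Simulates a successful alternative method.
    return {"verified": True, "method": "bank_statement_analysis"}


def verify_with_fallback(applicant, providers):
    """Try providers in policy order and record every attempt for later review."""
    attempts = []
    for name, provider in providers:
        try:
            result = provider(applicant)
        except SourceUnavailable as exc:
            attempts.append({"provider": name, "status": "unavailable", "reason": str(exc)})
            continue
        attempts.append({"provider": name, "status": "ok"})
        return {"result": result, "attempts": attempts}
    # Every provider failed: hand over to structured exception handling.
    return {"result": None, "attempts": attempts, "route": "exception_queue"}


outcome = verify_with_fallback(
    {"applicant_id": "A-1"},
    [("payroll_api", primary_income_check), ("bank_statements", fallback_income_check)],
)
```

Here `outcome["attempts"]` holds both the failed primary attempt and the successful fallback, so the path taken does not need to be reconstructed from logs later.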

Orchestration in High-Volume Lending Workflows

In high-volume lending, verification performance is driven by how checks are sequenced and governed. Data gaps, vendor downtime, and SLA pressure are constant. Orchestration defines whether the system responds predictably or relies on manual workarounds.

Without orchestration, verification workflows default to static sequences: every applicant is routed through the same steps, regardless of signal strength or risk profile. Orchestration introduces structure, adaptability, and governance into verification execution.

Operational Components of Verification Orchestration

Signal-First Workflow Design

Orchestrated workflows prioritize what needs to be known over which check is run. Identity confidence, employment continuity, income stability, and address credibility are treated as signals that can be satisfied through multiple sources or methods.

Instead of binding verification logic to a specific vendor or data pull, orchestration evaluates whether a signal has reached a defined confidence threshold. Once met, downstream checks that add marginal value are avoided. This prevents over-verification while maintaining risk discipline.

This means:

Strong signals reduce workflow depth

Weak or conflicting signals trigger targeted escalation

Vendor choice becomes an implementation detail, not a control dependency

This approach allows lending teams to improve decision quality without continuously expanding their verification stack.
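As a minimal sketch of the signal-first idea, a signal can be modeled as a confidence value that several sources may contribute to, with further checks skipped once the threshold is met. The signal names, source names, threshold values, and the choice to keep the strongest confidence seen are all illustrative assumptions; a real system would take these from risk policy.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; real values would come from risk policy.
THRESHOLDS = {"identity": 0.90, "income": 0.80}


@dataclass
class Signal:
    name: str
    confidence: float = 0.0
    sources: list = field(default_factory=list)

    def record(self, source: str, confidence: float) -> None:
        """Remember the contributing source and keep the strongest confidence seen."""
        self.sources.append(source)
        self.confidence = max(self.confidence, confidence)

    def is_satisfied(self) -> bool:
        return self.confidence >= THRESHOLDS[self.name]


def needs_check(signal: Signal) -> bool:
    """A further check is worth running only while the signal is below threshold."""
    return not signal.is_satisfied()


identity = Signal("identity")
identity.record("document_ocr", 0.72)          # below the 0.90 threshold
weak_needs_more = needs_check(identity)         # another source is still needed
identity.record("government_registry", 0.95)   # threshold reached
strong_needs_more = needs_check(identity)       # downstream checks can be skipped
```

Because the workflow asks whether the `identity` signal is satisfied rather than whether a particular vendor was called, swapping `document_ocr` for another source would not change the control logic.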

Conditional Sequencing Based on Confidence

Static verification flows assume uniform uncertainty. High-volume lending does not operate that way.

Orchestration enables workflows to adapt in real time based on signal quality and consistency. When early checks establish high confidence, later steps are either simplified or skipped. When uncertainty persists, additional verification is introduced deliberately, not as a default requirement.

This conditional sequencing:

Preserves straight-through processing for low-risk profiles

Contains operational load by limiting unnecessary checks

Ensures escalations are driven by data gaps, not blanket rules

Speed is achieved without relaxing control because both are driven by a single decision framework.
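Conditional sequencing can be illustrated with a short sketch. The check names, the applicant fields, and the 0.85 threshold below are hypothetical, and combining confidences by taking the maximum is a simplifying assumption rather than a recommended scoring method.

```python
from typing import Callable, List, Tuple

# A check is a name plus a function returning a confidence between 0 and 1.
Check = Tuple[str, Callable[[dict], float]]


def run_sequence(applicant: dict, checks: List[Check], threshold: float) -> dict:
    """Run checks in order and stop as soon as confidence reaches the threshold."""
    confidence = 0.0
    executed = []
    for name, check in checks:
        confidence = max(confidence, check(applicant))
        executed.append(name)
        if confidence >= threshold:
            return {"outcome": "straight_through", "executed": executed}
    # Uncertainty persisted through every step, so escalate deliberately.
    return {"outcome": "escalate", "executed": executed}


checks = [
    ("bureau_match", lambda a: a["bureau_score"]),
    ("payslip_review", lambda a: a["payslip_score"]),
    ("employer_callback", lambda a: a["callback_score"]),
]

strong = run_sequence(
    {"bureau_score": 0.93, "payslip_score": 0.90, "callback_score": 0.90}, checks, 0.85
)
weak = run_sequence(
    {"bureau_score": 0.40, "payslip_score": 0.60, "callback_score": 0.70}, checks, 0.85
)
```

The strong profile exits after the first check, while the weak profile runs all three and is escalated. Both paths come from the same function, which is the sense in which speed and control share one decision framework.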

Single Decision Logic for Speed and Control

Too often, verification rules are implemented inside individual workflows rather than governed centrally. Each product, channel, or partner flow ends up enforcing policy slightly differently based on local constraints, historical decisions, or implementation shortcuts.

This creates multiple interpretations of the same risk policy operating simultaneously within the same organization.

This leads to:

Different verification thresholds across products for the same customer profile

Journey-specific overrides that bypass standard controls

Policy changes requiring multiple code and vendor updates

Limited visibility into how consistently risk rules are enforced

When verification logic is distributed across journeys, control degrades as volume and product complexity increase. This disconnect makes outcomes harder to predict, harder to audit, and harder to correct without disrupting live operations. Centralizing verification logic separates policy definition from journey execution, allowing lending teams to scale products and channels without multiplying risk pathways.
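One way to picture the separation of policy definition from journey execution is a single versioned policy object that every journey consults. This is a sketch under assumptions: the `VerificationPolicy` class, the version label, the thresholds, and the journey functions are hypothetical.

```python
from dataclasses import dataclass
from types import MappingProxyType


@dataclass(frozen=True)
class VerificationPolicy:
    version: str
    thresholds: MappingProxyType  # read-only view, so journeys cannot mutate it


# Defined once, centrally. Values are illustrative only.
POLICY = VerificationPolicy(
    version="policy-v3",
    thresholds=MappingProxyType({"identity": 0.90, "income": 0.80}),
)


def is_sufficient(signal: str, confidence: float, policy: VerificationPolicy = POLICY) -> bool:
    """The only place where sufficiency is decided."""
    return confidence >= policy.thresholds[signal]


# Journeys call the shared rule instead of hard-coding their own thresholds.
def onboarding_journey(identity_confidence: float) -> bool:
    return is_sufficient("identity", identity_confidence)


def partner_journey(identity_confidence: float) -> bool:
    return is_sufficient("identity", identity_confidence)
```

With this shape, a threshold change is one edit to `POLICY`, and the same customer profile gets the same answer in every journey.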

Audit Trails: From Static Artifacts to Living Evidence

Audit readiness in lending is often misunderstood as a storage problem. If documents, screenshots, and API responses are archived somewhere, the assumption is that decisions can be defended later.

Audits, investigations, and regulatory reviews rarely question whether a check was performed. They examine whether the decision logic was sound, consistently applied, and appropriate given the information available at that point in time. Static artifacts do not answer those questions. They capture outcomes, not reasoning.

As lending operations scale, the gap between what is stored and what must be explained becomes increasingly visible.

Why Traditional Audit Trails Break Down

Most lending systems were not designed to preserve verification context. They store what was convenient to save at the time of execution, not what would be required months or years later.

Common limitations include:

Outcome-only records: Verification is stored as pass/fail results, without showing how confidence was formed or changed.

Missing timing context: Records don’t show when data was sourced, making it hard to judge freshness at decision time.

Fragmented evidence: Identity, income, and bureau checks live in separate systems, breaking the decision trail.

Untracked fallbacks and overrides: Alternative checks and manual interventions are rarely captured in a clear, reviewable form.

During audits, teams are forced to manually reconstruct decisions from logs, dashboards, emails, and analyst notes, resulting in high effort, weak defensibility, and inconsistent explanations.

Living Evidence as Defensible Decision Proof

Living evidence is an execution output, not a reporting construct. Well-designed verification workflows automatically preserve decision context (what was evaluated, why it was sufficient, and how confidence evolved) without requiring later interpretation.

Audit-grade evidence preserves the reasoning, timing, and controls behind every verification decision.

In practice:

Raw signals, source attribution, and confidence indicators are retained alongside final decisions.

Rules, thresholds, and sequencing applied during verification are versioned and linked to each decision.

Alternative data sources, manual interventions, and overrides are captured with explicit rationale, not inferred later.

Evidence remains aligned across onboarding, underwriting, disbursal, servicing, and claims or collections.
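A minimal sketch of an evidence record with these properties might look like the following. The field names, identifiers, and timestamps are hypothetical and chosen only for illustration; the structure simply keeps signals, their sources and timing, the policy version, and any override rationale together with the decision.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class EvidenceRecord:
    decision_id: str
    policy_version: str   # links the decision to the rules in force at the time
    decided_at: str       # ISO 8601 timestamp of the decision
    signals: list = field(default_factory=list)
    overrides: list = field(default_factory=list)

    def add_signal(self, name, source, confidence, sourced_at):
        """Retain the raw signal with its source and the time the data was sourced."""
        self.signals.append(
            {"name": name, "source": source, "confidence": confidence, "sourced_at": sourced_at}
        )

    def add_override(self, actor, rationale):
        """Reject overrides that do not state why they were made."""
        if not rationale.strip():
            raise ValueError("an override must carry an explicit rationale")
        self.overrides.append({"actor": actor, "rationale": rationale})


record = EvidenceRecord(
    decision_id="D-1001",
    policy_version="policy-v3",
    decided_at=datetime.now(timezone.utc).isoformat(),
)
record.add_signal("income", "bank_statements", 0.84, "2024-01-15T09:30:00+00:00")
record.add_override("analyst_17", "Payroll source unavailable; fallback source accepted")

# Serialized at execution time, so nothing has to be reconstructed later.
serialized = json.dumps(asdict(record), sort_keys=True)
```

Because the record is written while the decision is executed, a reviewer can later read the data freshness, the applied policy version, and the reason for any override from one place.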

Audit-grade evidence extends beyond regulatory compliance and directly strengthens operational resilience and risk governance.

When verification trails are clear and complete, teams can review decisions consistently, evaluate policy changes accurately, and resolve disputes faster. Accountability across product, risk, and operations becomes explicit. Weak audit trails, by contrast, depend on assumed process integrity rather than provable execution, an approach that does not hold at scale.

The Strategic Shift: Verification as a Competitive Advantage

As lending operations scale and diversify, speed and access alone stop being decisive.

Most lenders can digitize onboarding and compress turnaround times. The real differentiators now sit deeper in the stack: how consistently risk policies are enforced, how efficiently operations scale under load, and how confidently decisions can be defended under regulatory or audit scrutiny.

Verification architecture sits at the intersection of all three.

Enterprises that adopt a modular, orchestrated verification layer gain tangible advantages:

Faster product and journey launches, with verification logic reused across both

More stable operations at scale, through governed fallbacks and exception handling

Lower audit and compliance effort, with evidence captured during execution

Consistent risk enforcement, across channels, partners, and lifecycle stages

HyperVerify is designed to support this operating model. By separating verification intent from journey execution, it allows lending enterprises to evolve products and channels without fragmenting control.

Orchestration, fallback governance, exception handling, and audit-grade evidence are built into the same layer, ensuring that scale does not come at the cost of defensibility or discipline.

Verification Architecture Built for Scale

Verification workflows that perform adequately at lower volumes often degrade sharply as application counts increase, product lines expand, and decision timelines compress.

At higher volumes, verification failures are rarely dramatic. They surface as slower disbursals, inconsistent decisions across similar profiles, rising exception rates, and growing effort to explain outcomes after the fact.

Designing verification for scale therefore requires a structural shift in how verification is conceived and executed:

From isolated checks to orchestrated decision systems

From reactive fallbacks to explicit, governed pathways

From manual exception handling to structured resolution logic

From static records to execution-linked evidence

This shift is not about adding more controls. It is about ensuring that control remains intact as complexity increases.

HyperVerify functions as the verification infrastructure layer for this operating model rather than as a point solution, separating verification intent from journey execution, coordinating signals across workflows, and preserving audit-grade evidence as decisions are made.

For CXOs planning the next phase of growth, the question is no longer whether verification must scale. The more critical question is whether the architecture can handle scale without increasing risk, compliance exposure, or cost.

Tartan helps teams integrate, enrich, and validate critical customer data across workflows, not as a one-off step but as an infrastructure layer.