In the insurance industry, data is more than information.

It is the foundation of pricing, the lens for risk assessment, the trigger for claims decisions, and the evidence base for regulatory defense.

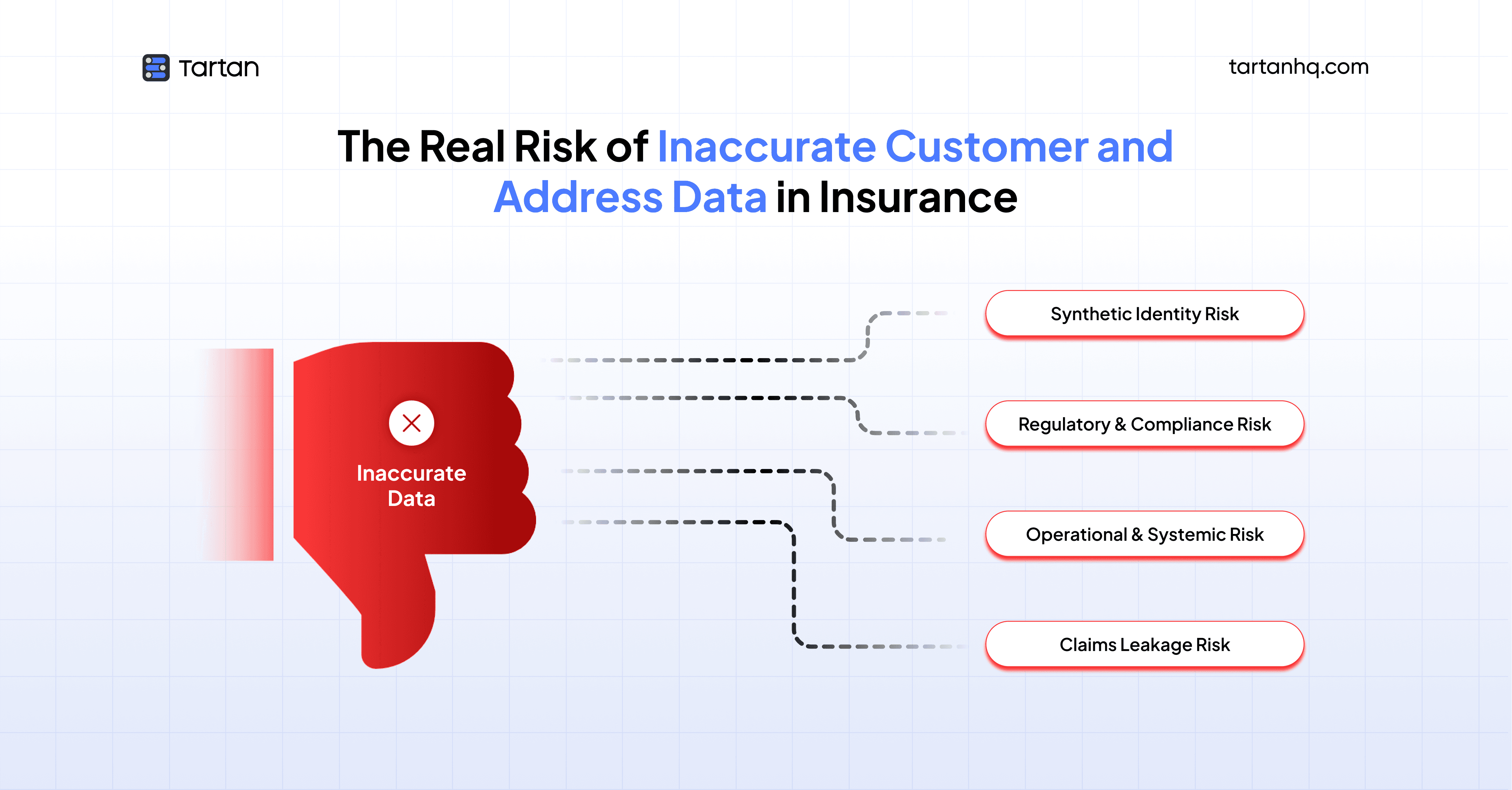

In today's evolving landscape, a silent crisis is hollowing out the margins of even the most established carriers: inaccurate customer and address data, often scattered across siloed systems.

When a policy is written with an unverified address or an unconfirmed identity, the ripple effects extend far beyond a returned mailer. They manifest as claims leakage, sophisticated fraud, and devastating regulatory penalties.

For B2B companies providing SaaS, core systems, or Insurtech solutions to the insurance sector, understanding this "siloed data" problem is no longer optional. It is a multibillion-dollar vulnerability, and the mandate is clear: verification is no longer a feature; it is a survival mechanism.

A policyholder moves to a riskier neighborhood but never updates their address. Claims are processed under the old geographic assumptions, and the carrier pays 5-7% more than warranted. Multiply this across thousands of claims monthly, and you're looking at $15-30 million annually leaking from a mid-sized insurer's bottom line.
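The arithmetic behind this scenario is easy to reproduce. The sketch below uses hypothetical inputs (5,000 claims a month at an average claim value of $5,000) and assumes every claim in that volume is overpaid by the same rate. In practice only a share of claims would be affected, so treat these numbers as illustrative, not as benchmarks:

```python
def annual_leakage(claims_per_month: int, avg_claim_value: float, overpayment_rate: float) -> float:
    """Yearly overpayment, assuming every claim in the volume is overpaid by the same rate."""
    return claims_per_month * 12 * avg_claim_value * overpayment_rate


# Hypothetical inputs: 5,000 claims per month at an average value of $5,000.
for rate in (0.05, 0.07):
    print(f"{rate:.0%} overpayment -> ${annual_leakage(5_000, 5_000, rate):,.0f} per year")
# 5% overpayment -> $15,000,000 per year
# 7% overpayment -> $21,000,000 per year
```

With these inputs, the 5% case lands at the bottom of the $15-30 million range. The top of the range corresponds to higher claim volumes or larger average claim values.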

As insurance distribution becomes increasingly digital and claims processes accelerate, the traditional assumptions around static, self-declared address data no longer hold.

Customers are more mobile, fraud networks more sophisticated, and regulators more demanding. In this environment, insurers that fail to modernize how they collect, verify, and govern customer and address data face measurable financial, operational, and reputational consequences.

This blog explores the three-headed hydra of claims leakage, sophisticated fraud, and regulatory fallout that stems from inaccurate data, and how modern API-driven verification is the only viable shield.

What Is Data Inaccuracy in Insurance?

Data inaccuracy occurs when customer information, including names, addresses, dates of birth, government IDs, contact numbers, or other identifying markers, is incomplete, incorrect, outdated, or inconsistent across systems.

In insurance, this is especially problematic because every major function (underwriting, pricing, risk assessment, claims processing, and compliance) relies on accurate customer and address data. If the foundation is flawed, the rest of the value chain tilts toward risk and inefficiency.

The Changing Role of Customer and Address Data in Insurance

Insurance today operates in a vastly different environment. Digital-first distribution has reduced face-to-face verification. Work-from-home, gig employment, and internal migration have made customer locations more fluid than ever before. At the same time, fraud has become more organized, data-driven, and legally sophisticated.

In this new reality, address data influences far more than logistics. It affects:

Risk pricing and underwriting assumptions

Claims admissibility and loss assessment

Fraud pattern detection and network analysis

Regulatory compliance and audit outcomes

What was once considered a static administrative input has evolved into a dynamic risk signal. Yet many insurers continue to manage it with legacy processes designed for a slower, more manual era.

Verification APIs, such as digital address verification, geo-location validation, document-to-address matching, and recency checks, are specifically designed to close this gap by attaching proof, context, and timestamps to address data.
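To make "attaching proof, context, and timestamps" concrete, here is a minimal sketch of an address record that carries its own evidence, along with a simple recency check. The `VerifiedAddress` fields, the method labels, and the 365-day re-verification window are hypothetical choices for illustration. They are not the schema of any particular verification API:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class VerifiedAddress:
    """An address plus the evidence behind it (field names are hypothetical)."""
    address: str
    method: str            # e.g. "digital_address_check", "document_match", "geo_location"
    source_ref: str        # identifier of the evidence returned by the verification step
    verified_at: datetime  # timezone-aware timestamp of the check


def needs_reverification(record: VerifiedAddress, now: datetime, max_age_days: int = 365) -> bool:
    """Recency check: True when the evidence is older than max_age_days (hypothetical window)."""
    return now - record.verified_at > timedelta(days=max_age_days)


rec = VerifiedAddress(
    address="12 Example Street, Sample City",
    method="document_match",
    source_ref="evidence-0001",
    verified_at=datetime(2024, 1, 15, tzinfo=timezone.utc),
)
print(needs_reverification(rec, now=datetime(2025, 6, 1, tzinfo=timezone.utc)))  # True
```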

Claims Leakage: How Inaccurate Data Erodes Profitability

Claims leakage is one of the most persistent and least visible threats to insurance profitability.

Unlike catastrophic losses or systemic underwriting errors, leakage does not announce itself through dramatic spikes.

When address data is inconsistent, outdated, or poorly validated, insurers lose their ability to enforce policy conditions accurately.

Geographic exclusions are misapplied.

Duplicate identities slip through de-duplication checks.

Loss assessors operate with incomplete context.

Each of these failures increases the likelihood that claims are approved at higher values than warranted or approved when they should not be paid at all.

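One of the most common leakage scenarios involves duplicate or fragmented customer identities: when one person exists as several records, the same loss can be claimed or compensated more than once. Another frequent source of leakage arises from location-based risk assumptions, where a claim is assessed against the risk profile of an outdated address.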

Crucially, this form of leakage is rarely traced back to data quality failures. It appears instead as:

Marginally higher claim approval rates

Slightly elevated average claim values

Increased re-openings and adjustments

The Fraud Magnet: Synthetic Identities and Location Manipulation

Insurance fraud is evolving. In 2026, opportunistic fraud is being replaced by systematic, data-driven attacks. Inaccurate or unverified data creates the shadows where these fraudsters hide.

From claim denials due to simple address errors to massive fraud cases rooted in fake identities, data inaccuracies impact every stage of the insurance value chain, from onboarding and underwriting to claims settlement and compliance reporting.

The Ghost Policyholder: Fraudsters commit synthetic identity fraud (SIF), combining real and fabricated data to open policies. By providing a slightly altered address or a non-residential mule address, they create a persona that bypasses traditional credit checks.

Once the policy is active, they stage "paper" accidents that exist only in documents, or report thefts of assets that never existed.

Fraud Disputes: When Weak Data Undermines Defense

When claims are challenged, insurers must justify their decisions not just internally, but to customers, ombudsmen, courts, and regulators.

This is where inaccurate customer and address data becomes particularly dangerous.

Fraudulent claims increasingly involve post-issuance manipulation of customer details. Addresses are updated after losses occur. Claims are filed for locations that were never properly insured.
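When address history is stored with dates, post-loss changes leave a trail. The following minimal sketch assumes a hypothetical `AddressChange` record kept by the policy system. It flags any change on a policy recorded on or after the reported loss date so that the change can be reviewed before payout:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AddressChange:
    """One entry in a policy's address history (hypothetical record shape)."""
    policy_id: str
    changed_on: date
    new_address: str


def changes_after_loss(changes: list[AddressChange], policy_id: str, loss_date: date) -> list[AddressChange]:
    """Return address changes on the policy recorded on or after the reported loss date."""
    return [c for c in changes if c.policy_id == policy_id and c.changed_on >= loss_date]


history = [
    AddressChange("P-1", date(2024, 3, 1), "22 Main St, Sample City"),
    AddressChange("P-1", date(2024, 9, 20), "48 Lake View Rd, Sample City"),
    AddressChange("P-2", date(2024, 9, 18), "9 Hill Rd, Example Town"),
]
for change in changes_after_loss(history, "P-1", loss_date=date(2024, 9, 15)):
    print(change.policy_id, change.changed_on, change.new_address)
# P-1 2024-09-20 48 Lake View Rd, Sample City
```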

Synthetic identities are constructed using plausible but unverifiable address information. In many cases, the insurer suspects wrongdoing but lacks the documentary or system-level evidence required to prove it.

Weak address normalization amplifies organized fraud:

Multiple claims from the same physical location appear unrelated due to formatting differences

Manual data entry creates variations that fraudsters deliberately exploit

Claims-matching systems can't correlate related losses when addresses don't normalize to identical strings

Fraud networks operate across 5-10 properties while each claim is processed in isolation
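Normalization is what lets those claims line up. The following minimal sketch uses a small hypothetical abbreviation map rather than a full postal addressing standard. It lowercases addresses, strips punctuation, and standardizes common abbreviations, then groups claims by the normalized result:

```python
import re
from collections import defaultdict

# Hypothetical, deliberately tiny map of common abbreviations and synonyms.
ABBREVIATIONS = {"street": "st", "road": "rd", "avenue": "ave", "apartment": "apt", "flat": "apt"}


def normalize_address(raw: str) -> str:
    """Lowercase, replace punctuation with spaces, collapse whitespace, standardize abbreviations."""
    text = re.sub(r"[^a-z0-9\s]", " ", raw.lower())
    return " ".join(ABBREVIATIONS.get(tok, tok) for tok in text.split())


def group_claims_by_location(claims: dict[str, str]) -> dict[str, list[str]]:
    """Map each normalized address to the claim IDs filed against it."""
    groups: dict[str, list[str]] = defaultdict(list)
    for claim_id, address in claims.items():
        groups[normalize_address(address)].append(claim_id)
    return dict(groups)


claims = {
    "C-101": "Flat 4, 22 Main Street",
    "C-207": "flat 4 22 main st.",
    "C-318": "FLAT 4, 22 MAIN ST",
    "C-412": "9 Hill Road",
}
for address, ids in group_claims_by_location(claims).items():
    if len(ids) > 1:
        print(address, ids)  # apt 4 22 main st ['C-101', 'C-207', 'C-318']
```

A production normalizer would need far richer rules (for instance, "st" can mean "street" or "saint"). Even this much, though, collapses three formatting variants into one location.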

As fraud becomes more sophisticated and disputes more adversarial, insurers must recognize a hard truth: data quality is no longer just an operational concern; it is a legal strategy.

Those who invest in defensible, verifiable, and well-governed data foundations will not only reduce fraud losses but also retain the ability to defend their decisions when it matters most.

Regulatory Fallout: From Data Gaps to Governance Failures

Regulatory scrutiny in insurance has intensified globally, with a growing emphasis on data integrity, customer protection, and process transparency. Regulators no longer accept intent or policy language alone; they expect insurers to demonstrate how decisions are made and supported by data.

Today’s insurance ecosystem is digital-first, API-driven, and increasingly remote. Policies are issued without physical interaction, claims are processed without site visits, and address updates are made through digital channels.

In response, regulators now expect insurers to show demonstrable diligence, not merely procedural compliance.

This means insurers must show:

How customer and address data was collected

What validation mechanisms were applied

When verification occurred relative to policy issuance or claim initiation

Whether the level of verification was proportionate to risk

How changes to customer data were governed and audited
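An append-only event log is one way to answer these questions from data rather than from memory. The sketch below assumes a hypothetical `AuditEvent` record and hypothetical event names. It checks that an address verification preceded policy issuance and lists address changes that were never re-verified:

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AuditEvent:
    """One governed event in a policy's data history (hypothetical shape and event names)."""
    policy_id: str
    event: str        # "address_collected", "address_verified", "policy_issued", "address_changed"
    at: datetime
    detail: str = ""  # channel used or validation mechanism applied


def verified_before_issuance(events: list[AuditEvent], policy_id: str) -> bool:
    """True if at least one address verification precedes the policy's first issuance event."""
    mine = [e for e in events if e.policy_id == policy_id]
    issued = [e.at for e in mine if e.event == "policy_issued"]
    verified = [e.at for e in mine if e.event == "address_verified"]
    return bool(issued) and any(v <= min(issued) for v in verified)


def unverified_changes(events: list[AuditEvent], policy_id: str) -> list[AuditEvent]:
    """Address changes not followed by a fresh verification, in chronological order."""
    pending: list[AuditEvent] = []
    for ev in sorted((x for x in events if x.policy_id == policy_id), key=lambda x: x.at):
        if ev.event == "address_changed":
            pending.append(ev)
        elif ev.event == "address_verified":
            pending.clear()  # a later verification covers all earlier changes
    return pending


events = [
    AuditEvent("P-1", "address_collected", datetime(2024, 1, 1, 10, 0), "web form"),
    AuditEvent("P-1", "address_verified", datetime(2024, 1, 1, 10, 5), "postal database match"),
    AuditEvent("P-1", "policy_issued", datetime(2024, 1, 1, 11, 0)),
    AuditEvent("P-1", "address_changed", datetime(2024, 5, 2, 9, 30), "mobile app"),
]
print(verified_before_issuance(events, "P-1"))                # True
print([e.detail for e in unverified_changes(events, "P-1")])  # ['mobile app']
```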

Modern regulatory frameworks increasingly emphasize:

Data accuracy

Purpose limitation

Accountability

Auditability

Inaccurate customer and address data directly conflicts with these principles. If insurers cannot demonstrate that customer data is accurate, up to date, and collected with appropriate safeguards, they risk non-compliance not only with insurance regulations but also with broader data protection and consumer protection standards.

From a regulatory standpoint, poor data quality undermines:

Customer consent integrity

Fair treatment obligations

Transparency in decision-making

The regulatory risk associated with inaccurate address data compounds with scale.

Emerging Technology to Reduce Data Risk

Identity and Address Verification Platforms

These tools validate customer information against external trusted datasets in real time. Instead of trusting what the customer enters, insurers can:

Check addresses against official postal databases

Validate KYC documents via OCR and AI

Detect fake or manipulated IDs
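The following minimal sketch illustrates the first of these checks. A hypothetical in-memory `POSTAL_REFERENCE` table stands in for an official postal database, and its postcodes and localities are made up. Real providers expose their own APIs, which are not shown here:

```python
# Hypothetical stand-in for an official postal database: postcode -> locality.
POSTAL_REFERENCE = {
    "100001": "sample city",
    "200002": "example town",
}


def check_against_postal_reference(postcode: str, locality: str) -> str:
    """Compare a customer-entered postcode/locality pair with the reference data."""
    expected = POSTAL_REFERENCE.get(postcode.strip())
    if expected is None:
        return "postcode_not_found"
    if expected != locality.strip().lower():
        return "locality_mismatch"
    return "verified"


print(check_against_postal_reference("100001", "Sample City"))   # verified
print(check_against_postal_reference("100001", "Example Town"))  # locality_mismatch
print(check_against_postal_reference("999999", "Sample City"))   # postcode_not_found
```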

Machine Learning and AI for Fraud Detection

Advanced systems can analyze patterns across billions of records to identify anomalies far faster than human reviewers alone. AI can:

Recognize signature fraud patterns

Compare behaviors across claims

Flag inconsistencies in customer data

This reduces false positives and allows insurers to detect fraud before payouts occur.
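Production fraud models are far richer than anything that fits here. The core idea of comparing a claim against its peers can still be illustrated with a simple statistical stand-in (not a machine-learning model): the modified z-score. It uses the median and the median absolute deviation (MAD), so a single extreme claim cannot inflate the spread enough to hide itself. The peer group, the amounts, and the commonly used 3.5 cut-off are illustrative assumptions:

```python
from statistics import median


def flag_outlier_claims(amounts: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Flag claims whose modified z-score (median/MAD based) exceeds the threshold."""
    values = list(amounts.values())
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread in the peer group to measure against
    # 0.6745 scales MAD so the score is comparable to a standard z-score for normal data.
    return [cid for cid, v in amounts.items() if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical claim amounts for a group of similar policies.
claims = {"C-1": 1200, "C-2": 1350, "C-3": 1100, "C-4": 1250, "C-5": 1300, "C-6": 9800}
print(flag_outlier_claims(claims))  # ['C-6']
```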

Data Lakes and Unified Customer Profiles

One of the most underestimated contributors to inaccurate customer and address data in insurance is data fragmentation. Most insurers today do not suffer from a lack of data; they suffer from too many disconnected versions of the same customer.

A customer may exist as:

One record in the sales or distribution system

Another version in the policy administration system

A third version in the claims management platform

Yet another in partner, broker, or third-party administrator (TPA) systems

Each of these systems often captures slightly different customer details, particularly addresses, contact information, nominee details, and ID references. Over time, these inconsistencies compound into serious operational and regulatory risks.
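A unified profile needs a rule for which version of the address wins. One possible rule is sketched below with a hypothetical `SourceRecord` type. It prefers the most recently verified address and falls back to the most recently updated one only when no version has been verified:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class SourceRecord:
    """One system's version of a customer's address (hypothetical shape)."""
    system: str                         # e.g. "sales", "policy_admin", "claims", "broker"
    customer_id: str
    address: str
    updated_on: date
    verified_on: Optional[date] = None  # None if this address was never verified


def pick_golden_address(records: list[SourceRecord]) -> SourceRecord:
    """Prefer the most recently verified address; fall back to the most recently updated one."""
    verified = [r for r in records if r.verified_on is not None]
    if verified:
        return max(verified, key=lambda r: r.verified_on)
    return max(records, key=lambda r: r.updated_on)


records = [
    SourceRecord("sales", "CUST-9", "22 Main St, Sample City", updated_on=date(2023, 2, 1)),
    SourceRecord("policy_admin", "CUST-9", "22 Main Street, Sample City",
                 updated_on=date(2023, 2, 3), verified_on=date(2023, 2, 3)),
    SourceRecord("claims", "CUST-9", "48 Lake View Rd, Sample City", updated_on=date(2024, 8, 10)),
]
print(pick_golden_address(records).system)  # policy_admin
```

In this design, the newer but unverified claims-system address is not silently adopted. It would instead be routed for verification before it could replace the golden record.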

Traditional insurance technology stacks evolved in silos:

Sales and onboarding systems were built first

Policy administration systems came next

Claims platforms evolved separately

Compliance and reporting tools were added later

Each system collected customer data independently, often with:

Different field formats

Different validation rules

Different update frequencies

As a result, insurers frequently face situations where:

The address used for underwriting differs from the address used for claims

The KYC-verified address doesn’t match the communication address

The address submitted during claim filing doesn’t match policy records

This fragmentation is one of the root causes of claim delays, disputes, and rejections.
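Mismatches like these can be surfaced before a claim is adjudicated rather than during it. The following minimal sketch assumes that the addresses from each lifecycle stage can be pulled into one dictionary; the stage names are hypothetical. It compares each stage against the underwriting address after light normalization:

```python
import re


def _normalize(raw: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace (deliberately minimal)."""
    return " ".join(re.sub(r"[^a-z0-9\s]", " ", raw.lower()).split())


def address_mismatches(addresses_by_stage: dict[str, str], baseline: str = "underwriting") -> list[str]:
    """List lifecycle stages whose address differs from the baseline stage after normalization."""
    reference = _normalize(addresses_by_stage[baseline])
    return [stage for stage, addr in addresses_by_stage.items()
            if stage != baseline and _normalize(addr) != reference]


customer = {
    "underwriting": "22 Main St, Sample City",
    "kyc": "22, Main St. Sample City",
    "communication": "22 Main St Sample City",
    "claim_filing": "48 Lake View Rd, Sample City",
}
print(address_mismatches(customer))  # ['claim_filing']
```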

Building a Defensible Data Foundation: A Strategic Imperative

In insurance, inaccurate customer and address data doesn’t show up as a small operational issue. It shows up later as claims leakage, fraud disputes, delayed settlements, and regulatory exposure.

We see this repeatedly across insurers where data is collected once at onboarding, validated late, and rarely re-verified. By the time a claim is raised, discrepancies are already embedded into the policy lifecycle.

The problem isn’t lack of intent or diligence. It’s that manual and document-heavy verification models were never designed for digital-first insurance at scale. They typically rely on:

Self-declared addresses

Static KYC documents

Post-issuance checks

This combination leaves too much room for error, especially in group insurance, embedded insurance, and high-volume retail products.

This is where verification APIs shift from being a backend utility to a core risk control. Real-time customer and address verification APIs allow insurers to validate data at the point of onboarding and continuously thereafter, instead of discovering mismatches during claims or audits.

At TartanHQ, these customer verification APIs are already used by insurers to reduce claims leakage, limit fraud exposure, and shorten claim resolution cycles. The value isn't in adding more checks; it's in ensuring that every underwriting, servicing, and claims decision is backed by verifiable, source-linked data.

For insurers, the takeaway is straightforward: data accuracy is now a risk and compliance strategy, not an operations task. A defensible data foundation built on real-time, consent-led verification APIs reduces leakage, limits fraud exposure, and stands up to regulatory scrutiny.

In an environment where every claim and customer decision must be explainable, verified data is what allows insurers to operate with confidence, not assumptions.

Tartan helps teams integrate, enrich, and validate critical customer data across workflows, not as a one-off step but as an infrastructure layer.